In the digital age, data is power, and list crawling is one of the most efficient ways to gather that data at scale. List crawling is the process of automatically extracting structured information from web pages or databases, particularly lists of items such as URLs, products, contacts, or other entities.

List crawling is widely used in SEO, e-commerce, digital marketing, research, and data analysis. It enables businesses and researchers to collect large datasets quickly, saving time and effort compared to manual data entry.

However, powerful as it is, list crawling must be done responsibly, with respect for website policies, privacy rules, and legal regulations.

Defining List Crawling

At its core, list crawling is a subset of web crawling, a process where automated software (known as a “crawler” or “bot”) navigates through web pages to gather specific data.

Here, the crawler focuses on lists and other repeating, structured elements, such as:

- A list of URLs
- Product listings on an e-commerce site
- Directory entries (businesses, emails, addresses)
- Blog titles or links
- Job postings

These lists are extracted systematically, allowing the user to analyze or repurpose the data for research, indexing, or marketing purposes.

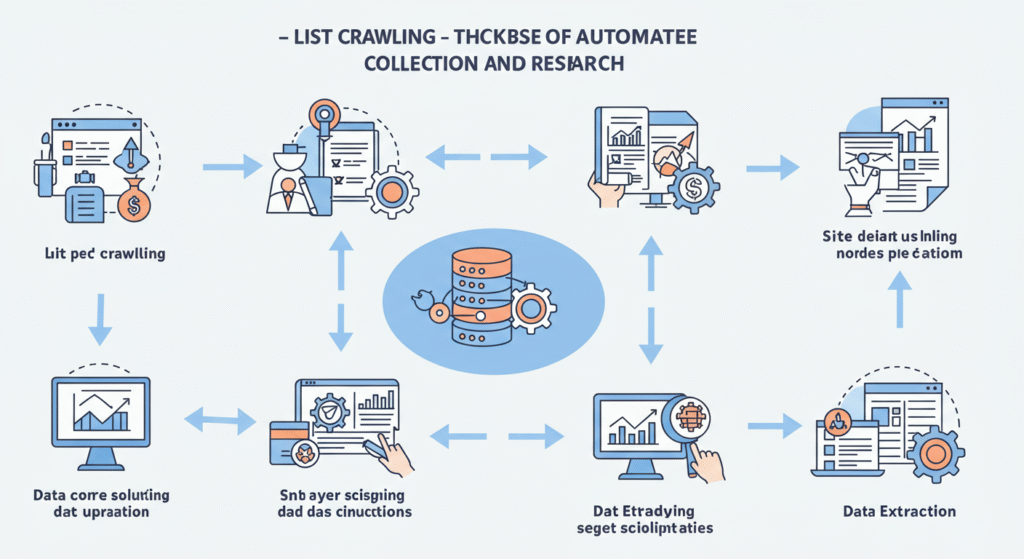

How List Crawling Works

List crawling follows a structured workflow, typically involving five key stages.

1. Seed URL or Source Definition

The crawler begins at a seed URL, the entry point into the target site or database (for example, the first page of a category or search-results listing).
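To make the seed-and-expand idea concrete, here is a minimal breadth-first sketch. It assumes a caller-supplied `get_links(url)` function that returns the hrefs on a page. That function is hypothetical; a real crawler would fetch and parse the page inside it. The sketch only follows links on the seed's host. `crawl_order`, the page limit, and the sample site are illustrative and do not come from any particular tool.

```python
from collections import deque
from urllib.parse import urldefrag, urljoin, urlparse


def crawl_order(seed, get_links, max_pages=100):
    """Visit pages breadth-first from a seed URL and return them in visit order.

    get_links(url) must return the hrefs found on that page (relative or absolute).
    Only URLs on the same host as the seed are queued.
    """
    seed_host = urlparse(seed).netloc
    frontier = deque([seed])  # URLs waiting to be visited
    seen = {seed}             # URLs already queued, to avoid revisits
    order = []
    while frontier and len(order) < max_pages:
        url = frontier.popleft()
        order.append(url)
        for href in get_links(url):
            # Resolve relative links against the current page and drop any #fragment.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == seed_host and absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return order


# Tiny in-memory "site" standing in for real HTTP fetches.
site = {
    "https://example.com/list": ["/list?page=2", "item/1", "https://other.org/x"],
    "https://example.com/list?page=2": ["/list", "item/2#reviews"],
}
print(crawl_order("https://example.com/list", lambda u: site.get(u, [])))
# ['https://example.com/list', 'https://example.com/list?page=2',
#  'https://example.com/item/1', 'https://example.com/item/2']
```

The `seen` set and the same-host check keep the crawl anchored to the seed's site and prevent the crawler from looping between pages that link to each other.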

2. Parsing HTML or API Data

The crawler parses the page's HTML, interpreting tags such as <ul>, <li>, <a>, and <div>, or reads structured data from an API when one is available. This step identifies the repeating pattern that marks each item in the list.
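The following is a minimal sketch of the parsing step using Python's standard-library `html.parser`. It assumes a flat `<ul>` list whose `<li>` elements are explicitly closed; nested lists or omitted `</li>` tags would need more careful handling. `ListItemParser` and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class ListItemParser(HTMLParser):
    """Collects the visible text and the first link of every <li> element."""

    def __init__(self):
        super().__init__()
        self.items = []
        self._in_li = False
        self._text = []
        self._href = None

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            # Start a fresh record for this list item.
            self._in_li, self._text, self._href = True, [], None
        elif tag == "a" and self._in_li and self._href is None:
            self._href = dict(attrs).get("href")

    def handle_endtag(self, tag):
        if tag == "li" and self._in_li:
            # Collapse runs of whitespace in the collected text.
            text = " ".join("".join(self._text).split())
            self.items.append({"text": text, "href": self._href})
            self._in_li = False

    def handle_data(self, data):
        if self._in_li:
            self._text.append(data)


parser = ListItemParser()
parser.feed('<ul class="products">'
            '<li><a href="/p/1">Desk lamp</a> <span>$19</span></li>'
            '<li><a href="/p/2">Office chair</a></li>'
            '</ul>')
print(parser.items)
# [{'text': 'Desk lamp $19', 'href': '/p/1'}, {'text': 'Office chair', 'href': '/p/2'}]
```

Libraries such as Beautiful Soup offer a more forgiving, selector-based way to do the same job. The standard-library version is shown here only because it has no dependencies.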

3. Extraction

Using predefined rules (for example, which element holds the title and which holds the price), the crawler extracts data fields such as titles, links, images, prices, or contact details, and saves them in a structured form such as CSV, JSON, or a SQL database.
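As a rough illustration of saving extracted fields, this sketch serializes a list of records to CSV and JSON with the standard `csv` and `json` modules. The field names (`title`, `url`, `price`) and the helper names `to_csv` and `to_json` are hypothetical.

```python
import csv
import io
import json


def to_csv(records, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def to_json(records):
    """Serialize a list of dicts to pretty-printed JSON text."""
    return json.dumps(records, indent=2, ensure_ascii=False)


records = [
    {"title": "Desk lamp", "url": "/p/1", "price": 19.0},
    {"title": "Office chair", "url": "/p/2", "price": 49.5},
]
print(to_csv(records, ["title", "url", "price"]))
print(to_json(records))
```

To write to disk instead of a string, pass `csv.DictWriter` a file opened with `open("items.csv", "w", newline="", encoding="utf-8")`. The `newline=""` argument is what the `csv` module documentation recommends for output files.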

4. Pagination and Navigation

Advanced crawlers automatically detect next-page links or pagination elements, ensuring that the entire list (not just the first page) is collected.
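One common way to detect the next page is to look for a link marked `rel="next"`. The sketch below assumes the target site uses that convention; many sites instead use a "Next" button, page-number links, or infinite scroll. It also assumes a caller-supplied `fetch(url)` function that returns HTML. `NextLinkFinder`, `iter_pages`, and the sample pages are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Records the href of the first <a> or <link> element marked rel="next"."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").lower().split()
        if tag in ("a", "link") and "next" in rel_values and self.next_href is None:
            self.next_href = attrs.get("href")


def iter_pages(start_url, fetch, max_pages=50):
    """Yield (url, html) for each page, following rel="next" links.

    Stops when no next link is found, a URL repeats, or max_pages is reached.
    """
    url, seen = start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        html = fetch(url)
        yield url, html
        finder = NextLinkFinder()
        finder.feed(html)
        # Resolve relative next links (e.g. "?page=2") against the current URL.
        url = urljoin(url, finder.next_href) if finder.next_href else None


pages = {
    "https://shop.example/c?page=1": '<ul><li>A</li></ul><a rel="next" href="?page=2">Next</a>',
    "https://shop.example/c?page=2": '<ul><li>B</li></ul><a rel="next" href="?page=3">Next</a>',
    "https://shop.example/c?page=3": "<ul><li>C</li></ul>",
}
for url, _html in iter_pages("https://shop.example/c?page=1", pages.__getitem__):
    print(url)
```

The `seen` set and `max_pages` cap guard against pagination loops, such as a last page that links back to itself.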

5. Storage and Processing

The extracted data is stored for analysis, indexing, or automation. It can be integrated into other systems like CRMs, SEO tools, or data warehouses.
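For the storage stage, here is a minimal sketch using Python's built-in `sqlite3`. The table name `items`, its columns, and the choice of the URL as primary key are assumptions made for illustration. Keying on the URL means a re-crawl updates existing rows instead of duplicating them.

```python
import sqlite3


def store_items(conn, items):
    """Insert or update crawled items, keeping one row per URL."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS items (
               url   TEXT PRIMARY KEY,
               title TEXT,
               price REAL
           )"""
    )
    # INSERT OR REPLACE overwrites the existing row when the URL is already stored.
    conn.executemany(
        "INSERT OR REPLACE INTO items (url, title, price) VALUES (:url, :title, :price)",
        items,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # use a file path for persistent storage
store_items(conn, [
    {"url": "/p/1", "title": "Desk lamp", "price": 19.0},
    {"url": "/p/2", "title": "Office chair", "price": 49.5},
])
store_items(conn, [{"url": "/p/1", "title": "Desk lamp", "price": 17.5}])  # a later re-crawl
print(conn.execute("SELECT url, price FROM items ORDER BY url").fetchall())
# [('/p/1', 17.5), ('/p/2', 49.5)]
```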

Examples of List Crawling in Action

1. SEO and Digital Marketing

Marketers use list crawling to gather:

- Competitors’ backlinks and referring domains
- Lists of keyword-targeting pages
- Blog post titles and publication dates
- Directory or citation links for local SEO

By analyzing these datasets, SEO specialists can identify link-building opportunities, keyword gaps, and insights into competitor strategy.

2. E-Commerce

E-commerce companies crawl product lists from marketplaces (like Amazon, eBay, or AliExpress) to:

- Monitor prices and inventory
- Analyze competitors’ products
- Track customer reviews
- Identify market trends

This data-driven intelligence helps online stores stay competitive and adjust pricing and strategy quickly.

3. Academic and Business Research

Researchers use list crawling to collect datasets from:

- Public directories
- Scientific publication lists
- Government databases
- Financial reports

These lists enable large-scale data analysis for economic studies, social research, and market predictions.

4. Job Market Aggregators

Platforms like Indeed and LinkedIn aggregate job postings from thousands of company websites, using crawling, employer-supplied feeds, or APIs. The result is a single searchable database of openings for job seekers.

5. Real Estate and Travel Platforms

Real estate sites use crawling to collect property listings, while travel agencies crawl hotel or flight lists for price comparison and availability tracking.

Popular Tools and Frameworks for List Crawling

Several programming tools and frameworks are used for list crawling, ranging from beginner-friendly platforms to advanced open-source libraries.

1. Python Libraries

- Beautiful Soup: Parses HTML and XML documents for data extraction.
- Scrapy: A high-performance web crawling framework designed for scalable data extraction.
- Selenium: Automates web browsers for crawling dynamic pages that rely on JavaScript.
- Requests: Fetches webpage content programmatically.

2. Browser Extensions

- Web Scraper.io (Chrome): Visual point-and-click list crawling.
- Instant Data Scraper: Extracts data tables or lists directly from a webpage.

3. Enterprise Tools

- Octoparse – GUI-based crawler for non-programmers.
- Apify – Cloud platform for large-scale web automation.
- Diffbot – AI-powered crawler that automatically identifies list structures.

These tools can collect structured data efficiently, whether for small-scale SEO research or massive enterprise-level projects.

Key Components of an Effective List Crawler

An effective list crawler should be:

- Accurate – Correctly identifies patterns and elements.
- Scalable – Handles thousands of pages without crashing.
- Adaptive – Adjusts to dynamic JavaScript and AJAX content.
- Compliant – Respects website rules and rate limits.
- Secure – Protects sensitive data and user credentials.

Ethical and Legal Considerations in List Crawling

While list crawling is a common digital practice, it operates within a complex ethical and legal framework.

1. Robots.txt Compliance

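Websites state which paths crawlers may visit in a robots.txt file at the root of the domain (for example, https://example.com/robots.txt). The file is advisory rather than a form of access control. Responsible crawlers nevertheless check it before fetching pages and obey its directives.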
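Python's standard library includes a robots.txt parser, `urllib.robotparser`. This sketch feeds it an inline example file rather than downloading one. The robots.txt content and the user-agent name `ExampleListBot` are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content; a real crawler would download it from the site root.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "ExampleListBot"  # hypothetical crawler name
for url in ("https://example.com/products", "https://example.com/private/list"):
    print(url, "allowed" if rp.can_fetch(USER_AGENT, url) else "disallowed")
print("crawl delay:", rp.crawl_delay(USER_AGENT))
```

Against a live site, call `rp.set_url("https://example.com/robots.txt")` followed by `rp.read()` to download and parse the real file before calling `can_fetch`.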

2. Copyright and Data Ownership

Even if data is publicly visible, it may still be protected by intellectual property laws. Extracting or redistributing it without permission can raise legal issues.

3. Privacy Concerns

If crawling involves personal data (emails, names, phone numbers), it must comply with privacy laws such as:

- GDPR (Europe)
- CCPA (California)
- PDPA (Singapore)
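One practical habit is data minimization: keep only the fields a project actually needs, and scrub contact details out of any free text you retain. The sketch below illustrates this with an allowlist and two rough regular expressions. The patterns are illustrative only: they will miss many email and phone formats and may over-match things like dates. Running them does not by itself make a dataset compliant with GDPR, CCPA, or PDPA. `minimize` and the sample record are hypothetical.

```python
import re

# Rough, illustrative patterns; real contact-detail detection needs far more care.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def minimize(record, allowed_fields):
    """Keep only allowlisted fields and mask emails and phone numbers in their text."""
    kept = {k: v for k, v in record.items() if k in allowed_fields}
    for key, value in kept.items():
        if isinstance(value, str):
            value = EMAIL_RE.sub("[email removed]", value)
            kept[key] = PHONE_RE.sub("[phone removed]", value)
    return kept


record = {
    "company": "Acme Tools",
    "email": "jane@acme.com",
    "notes": "Call +1 (555) 010-2030 or mail sales@acme.com",
}
print(minimize(record, {"company", "notes"}))
# {'company': 'Acme Tools', 'notes': 'Call [phone removed] or mail [email removed]'}
```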

4. Rate Limiting

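Aggressive crawling can overload a server. Ethical crawlers space out requests with delays, limit concurrent connections to each site, and back off when the server signals overload (for example, with an HTTP 429 Too Many Requests response).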
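A simple way to enforce delay intervals is to track when each host was last contacted and sleep until a minimum gap has passed. This is a minimal single-threaded sketch. `PoliteThrottle` is a hypothetical name, and the delay values are arbitrary examples rather than recommendations.

```python
import time
from urllib.parse import urlparse


class PoliteThrottle:
    """Enforces a minimum delay between consecutive requests to the same host."""

    def __init__(self, min_delay=1.0):
        self.min_delay = min_delay
        self._last_request = {}  # host -> time.monotonic() of the previous request

    def wait(self, url):
        """Block until a request to url's host is allowed, then record the time."""
        host = urlparse(url).netloc
        earliest = self._last_request.get(host, float("-inf")) + self.min_delay
        now = time.monotonic()
        if now < earliest:
            time.sleep(earliest - now)
        self._last_request[host] = time.monotonic()


throttle = PoliteThrottle(min_delay=0.5)
start = time.monotonic()
for url in ["https://a.example/p1", "https://a.example/p2", "https://b.example/p1"]:
    throttle.wait(url)  # a real crawler would fetch the page right after this call
    print(f"{time.monotonic() - start:.1f}s  {url}")
```

Delays are tracked per host, so a crawler visiting several sites only slows down for the site it has just contacted. When a site declares a `Crawl-delay` in robots.txt, `urllib.robotparser.RobotFileParser.crawl_delay()` can supply `min_delay`.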

5. Platform Terms of Service

Many websites explicitly prohibit scraping in their Terms of Service. Violating these can lead to IP bans or legal action.

In summary, ethical list crawling means balancing automation with respect for digital boundaries.

Challenges of List Crawling

While powerful, list crawling comes with several technical and operational challenges:

- Dynamic Content: Many modern websites use JavaScript frameworks (like React or Angular) that render lists in the browser, so the initial HTML may not contain the data. Extracting it then requires a browser automation tool such as Selenium or direct calls to the site's underlying API.
- Anti-Bot Measures: Websites use CAPTCHAs, user-agent detection, and throttling to block automated scraping.
- Data Quality: Poorly structured lists or inconsistent formats can lead to messy datasets.
- Maintenance: Websites change layouts often, which can break crawler scripts.
- Scalability: Handling millions of pages requires robust infrastructure and data storage systems.
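When a site throttles a crawler, the respectful response is to slow down rather than push harder. A common pattern for this is retrying with exponential backoff. This sketch assumes the caller's `fetch(url)` function raises a hypothetical `RetryableError` for responses worth retrying, such as HTTP 429 or 503. The doubling schedule and the 10% jitter are illustrative choices. Backing off is meant to honor the server's signal, not to get around anti-bot measures.

```python
import random
import time


class RetryableError(Exception):
    """Raised by fetch() for responses worth retrying, e.g. HTTP 429 or 503."""


def fetch_with_backoff(fetch, url, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), waiting roughly base_delay, 2x, 4x, ... between failed attempts."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # give up after the last attempt
            delay = base_delay * (2 ** attempt)
            # Add up to 10% random jitter so many workers do not retry in lockstep.
            sleep(delay + random.uniform(0, delay * 0.1))


attempts = []


def flaky_fetch(url):
    """Stand-in fetch that is rate-limited twice before succeeding."""
    attempts.append(url)
    if len(attempts) < 3:
        raise RetryableError("HTTP 429 Too Many Requests")
    return "<ul><li>item</li></ul>"


print(fetch_with_backoff(flaky_fetch, "https://example.com/list", base_delay=0.1))
```

The `sleep` parameter exists so the waiting can be replaced in tests. In production it stays `time.sleep`.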

Best Practices for List Crawling

To ensure efficiency, accuracy, and compliance, follow these crawling best practices:

- Define Clear Objectives: Identify what data you need (e.g., links, prices, or metadata) before starting.
- Start Small: Run a test crawl on a few pages before scaling up.
- Respect Robots.txt and TOS: Always check a site's robots.txt file and Terms of Service before crawling.
- Throttle Requests: Use time delays to prevent server overload.
- Clean and Validate Data: Use scripts or software to remove duplicates and errors.
- Automate Responsibly: Avoid collecting sensitive or private data.
- Use APIs When Available: Many websites provide official API endpoints for structured data access.
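The cleaning step can be sketched as follows: normalize whitespace, drop rows missing required fields, and de-duplicate on a normalized URL. The normalization rules used here are assumptions that suit many sites but not all; trailing slashes in particular can be significant on some servers. The rules are lower-casing the scheme and host, dropping the `#fragment`, and trimming a trailing slash. `normalize_url`, `clean`, and the sample rows are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url):
    """Lower-case scheme and host, drop the fragment and trailing slash."""
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def clean(records, required=("url", "title")):
    """Return records with tidy whitespace, no missing required fields, and no duplicate URLs."""
    seen, cleaned = set(), []
    for rec in records:
        # Collapse runs of whitespace in every string field.
        rec = {k: " ".join(v.split()) if isinstance(v, str) else v for k, v in rec.items()}
        if any(not rec.get(field) for field in required):
            continue  # drop incomplete rows
        key = normalize_url(rec["url"])
        if key in seen:
            continue  # drop duplicates
        seen.add(key)
        rec["url"] = key
        cleaned.append(rec)
    return cleaned


raw = [
    {"url": "https://Shop.example/p/1/", "title": "  Desk   lamp "},
    {"url": "https://shop.example/p/1#reviews", "title": "Desk lamp"},
    {"url": "https://shop.example/p/2", "title": ""},
    {"url": "https://shop.example/p/3", "title": "Chair"},
]
print(clean(raw))
# [{'url': 'https://shop.example/p/1', 'title': 'Desk lamp'}, {'url': 'https://shop.example/p/3', 'title': 'Chair'}]
```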

Applications of List Crawling in SEO and Marketing

1. Competitor Analysis

Extract competitors’ web pages, meta tags, and backlink lists to understand their SEO strategies.

2. Keyword Discovery

Crawl SERPs (search engine results pages) to identify high-performing content and keyword clusters.

3. Link Prospecting

List crawling helps find link opportunities in niche directories, blogs, and forums.

4. Content Research

Marketers can crawl lists of trending articles or product categories for content planning.

5. Local SEO and Business Directories

Gather local business listings from platforms like Yelp or Google Maps to build citation profiles.

By combining list crawling with analytics, marketers gain powerful insights into audience behavior and market trends.

The Future of List Crawling

The future of list crawling is moving toward automation with intelligence. Emerging technologies are enhancing how data is collected, analyzed, and applied:

- AI and Machine Learning – Automated pattern detection improves data accuracy.
- Natural Language Processing (NLP) – Extracts semantic meaning from unstructured lists.
- API-based Crawling – Reduces legal risk and increases efficiency.
- Serverless Cloud Crawlers – Offer scalable, cost-efficient crawling infrastructure.
- Data Ethics Frameworks – Establish fair standards for responsible data extraction.

In short, list crawling is evolving from basic data collection to intelligent, ethical data automation.

Conclusion

List crawling stands at the intersection of automation, analytics, and digital innovation. It empowers businesses to gather actionable insights from the vast online ecosystem, in fields ranging from SEO and marketing to research and e-commerce.

However, the power of list crawling must be balanced with ethical responsibility. By respecting privacy, terms of service, and data protection laws, organizations can harness the benefits of automation without compromising integrity or legality.

As data continues to fuel the modern economy, list crawling will remain one of the core technologies driving digital intelligence, growing smarter, faster, and more connected than ever.